GetApp offers objective, independent research and verified user reviews. We may earn a referral fee when you visit a vendor through our links.


A/B Testing Best Practices: Running the Perfect Split Test

Use these A/B testing best practices to do more with your marketing test data than ever before.

Successful marketing means understanding your audience. A/B testing is one of the most effective tools to accomplish this, but it can be difficult to conduct meaningful tests, especially if you're not taking full advantage of your software.

As a marketing professional navigating the myriad of testing options, you might ask yourself: Am I testing the right things? Am I testing too many things? How do I attribute results effectively?

With the help of your marketing tech and best practices informed by Gartner, you'll be able to answer these questions to improve your current testing processes, allowing you to gain more insight into what makes your customers tick (and your content perform).

What is A/B testing?

A/B testing (in marketing) involves deploying two versions of a piece of marketing collateral (e.g., visual assets, copy, website features, fonts, colors, or marketing email flows) to see which one performs better with a given audience. The process involves controlling variables, collecting data, and implementing results to improve marketing performance. [1]
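The article doesn't prescribe a statistical method for deciding which version "performs better," but when the metric is a conversion rate, a common choice is a two-proportion z-test. The minimal sketch below assumes you have raw counts of conversions and visitors (or recipients) for each version. The function name and the example numbers are hypothetical, and your testing tool may use a different method (for example, a Bayesian or sequential approach).

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the conversion rates of version A and version B.

    Returns (z, p_value) for a two-sided test of "A and B convert at the same rate".
    Hypothetical helper for illustration; uses the pooled-variance normal approximation.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Both versions converted at 0% or 100%; the test is undefined.")
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical example: 200 of 5,000 recipients converted on A, 260 of 5,000 on B.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be noise alone. It says nothing about whether the change is worth rolling out, which is a separate decision.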

For a more detailed refresher, see our article on the topic: What is A/B Testing in Digital Marketing? A Startup Founder's Guide

Merely conducting tests means you're well on your way to more tailored (and effective) customer-facing content, but there's more ground to gain. With a few key optimizations, you can accelerate those positive results to improve content, increase customer engagement, and earn more conversions, faster.

Leverage available data to define whom and what to test

When conducting research of any kind, the most powerful tests and insights come from solid data-driven strategies. The first step in implementing this for your marketing A/B testing is defining what will be tested and for whom.

Whom you test should be informed by the buyer personas or customer segments that are most valuable to your marketing objectives and overall business goals. Some ways to segment your audience include past transactions, relative stage of the customer journey, or more standard targeted marketing metrics like demographic, psychographic, behavioral, and geographic traits. [2]
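Once you've chosen a segment, each person in it still needs to see only one version, consistently, for the comparison to be fair. One common approach (an assumption here, not something this article or a specific tool prescribes) is to hash a stable customer ID together with an experiment name. The sketch below uses hypothetical record fields (`id`, `segment`) and hypothetical function names.

```python
import hashlib


def assign_variant(customer_id: str, experiment: str, share_a: float = 0.5) -> str:
    """Deterministically place a customer in version 'A' or 'B' for one experiment."""
    key = f"{experiment}:{customer_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Reduce the first 8 bytes of the hash to one of 10,000 buckets (0..9999).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "A" if bucket < share_a * 10_000 else "B"


def enroll_segment(customers: list[dict], target_segment: str, experiment: str) -> dict[str, str]:
    """Enroll only customers in the target segment; everyone else stays out of the test."""
    return {
        c["id"]: assign_variant(c["id"], experiment)
        for c in customers
        if c["segment"] == target_segment
    }


# Hypothetical customer records, e.g., exported from a CRM.
customers = [
    {"id": "cust-001", "segment": "repeat_buyer"},
    {"id": "cust-002", "segment": "first_time_visitor"},
    {"id": "cust-003", "segment": "repeat_buyer"},
]
print(enroll_segment(customers, "repeat_buyer", "spring-subject-line"))
```

Because the assignment depends only on the customer ID and the experiment name, a returning customer sees the same version every time, while a new experiment name reshuffles the split.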

Related reading

Haven't mapped out your own buyer personas? Visit our article on How To Reach More Customers With a Winning Digital Marketing Strategy to learn more about developing them.

As for what you should measure, it's good practice to derive it from existing customer data (both qualitative and quantitative) while placing your findings in the context of the overall customer journey. Below are some data sources you can use to do this. [2]

Web, mobile, campaign, and journey map analytics:

Open, clickthrough, and conversion rates

Heat map results (this can be gleaned from software designed to track customer activity)

Highest-cost traffic pages

Most visited pages

Top exit and bounce pages

Most valuable traffic pages

Direct and indirect data and feedback: Analyze what both potential and existing customers are saying when they engage with your business, what their sentiment is about your brand, and what they like and dislike about other brands in your space. Review survey data, social media sentiment, and customer support communications to identify potential areas for improvement.

Prior test results: Assess results from similar pages, past campaigns, and other areas with similar functionality (e.g., social media captions vs. email subject lines). Focus on the stage of the customer journey when comparing, then iterate on subsequent tests with these factors in mind.

How to use your software to leverage data

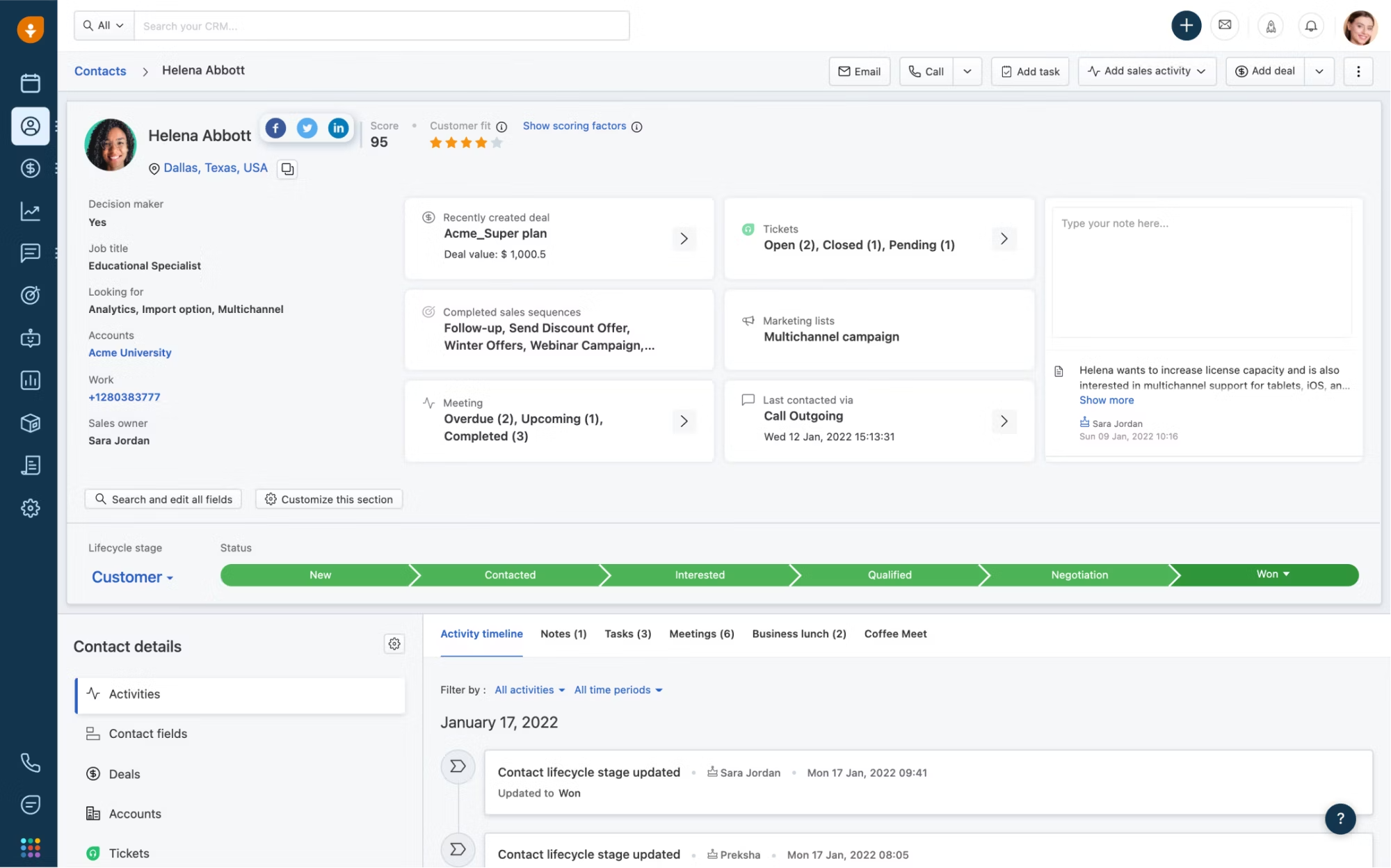

Leverage all available customer data within your CRM or customer data platform to paint a clear picture of your customer journey. Doing so will allow you to be more granular in your testing and glean insights that are specific and actionable based on how a consumer is currently engaging with your business.

Examples include specific content or subject matter an individual responds to, a particular marketing channel that sees more engagement, or details like the region or even zip code a consumer resides in.

An example of customer journey mapping functionality from Freshsales (Source)

Develop your own testing roadmap

Now that you've determined what and whom to test, it's time to add the when. Ultimately, this depends on how the test is prioritized, how quickly results are needed, and whether any time constraints are tied to the outcome. For example, compare testing initial creative for a new product campaign launching two months from now with general testing of email subject lines and social captions to improve overall marketing performance.

The former is high priority, time-bound, and may require extensive testing of multiple variables, while the latter is lower priority, not tied to any specific time period, and requires testing only a single variable at a time (e.g., subject line A vs. subject line B).

Consider the following factors for your own testing:

Value: Value can mean a test that closes an immediate gap in marketing effectiveness, a test that advances your most important organizational goal, or one that satisfies the curiosity of an important stakeholder. However you define it, assigning a value to each test will help you prioritize when and how it should be run.

Speed: This refers to how long it will take you to design, implement, and monitor the test before it has collected enough data for a statistically reliable result. Simpler tests with minimal variables, or those run on highly trafficked pages, deploy more quickly and yield faster results, whereas multivariate tests may require a longer window to plan and execute.

Timing: This aspect of testing depends primarily on whether the test results rely on a specific timeframe or event, such as a new product launch. It also takes into account other tests being run, as well as planned updates or product developments that could skew test results.
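The Speed factor largely comes down to how many people each version must reach before the result can be trusted. A standard normal-approximation formula for comparing two conversion rates gives a rough per-version sample size, which you can divide by daily traffic to estimate how long a test will run. This is a sketch under assumptions: the 5% significance level and 80% power are conventional defaults rather than figures from this article, and the baseline rate, expected rate, and traffic numbers are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per version to detect baseline -> expected (two-sided test)."""
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    n = (z_alpha + z_beta) ** 2 * variance / (baseline - expected) ** 2
    return math.ceil(n)


def estimated_days(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days to reach the sample size if daily traffic is split evenly across versions."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical: 4% baseline conversion, hoping to detect a lift to 5%.
n = sample_size_per_variant(0.04, 0.05)
print(n, "visitors per version")
print(estimated_days(n, daily_visitors=1_000), "days on a page with 1,000 visitors/day")
print(estimated_days(n, daily_visitors=10_000), "days on a page with 10,000 visitors/day")
```

This mirrors the Speed point: the same test finishes far sooner on a high-traffic page, and smaller expected differences require much larger samples.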

After defining the foundational elements of your A/B testing strategy, the next step is to create a testing roadmap or workback plan to help with task governance, resource allocation, and scheduling. This calendar will act as a living document containing details like action items, testing schedule, points of contact, and more.

The roadmap will also include the details of the test: who is being tested, what variables are being tested, the timing of the test, and the metrics for success. A comprehensive roadmap doesn't just track testing parameters either; it will also include the results and impact of the test to serve as a record for future testing.
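If you keep the roadmap in a spreadsheet or project tool, each row can capture the details listed above. The sketch below models one roadmap entry as a Python dataclass (all field names, dates, and test names are hypothetical) and adds a check for the Timing concern: two tests hitting the same audience at the same time can skew each other's results.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class RoadmapEntry:
    """One test on a testing roadmap (hypothetical structure)."""
    name: str
    audience: str                  # who is being tested (segment or persona)
    variables: list[str]           # what is being tested
    start: date                    # timing of the test
    end: date
    success_metric: str            # e.g., "clickthrough rate"
    owner: str                     # point of contact
    depends_on: list[str] = field(default_factory=list)  # tasks that must finish first
    result: Optional[str] = None   # filled in afterward as a record for future tests


def overlaps(a: RoadmapEntry, b: RoadmapEntry) -> bool:
    """True if two tests target the same audience during overlapping date ranges."""
    return a.audience == b.audience and a.start <= b.end and b.start <= a.end


def ready_to_start(entry: RoadmapEntry, completed: set[str]) -> bool:
    """True once every dependency of the entry is in the completed set."""
    return all(task in completed for task in entry.depends_on)


subject_test = RoadmapEntry("Subject line A vs. B", "repeat_buyer", ["subject line"],
                            date(2024, 3, 1), date(2024, 3, 14), "open rate", "Email owner")
cta_test = RoadmapEntry("CTA placement", "repeat_buyer", ["CTA position"],
                        date(2024, 3, 10), date(2024, 3, 24), "clickthrough rate", "Email owner",
                        depends_on=["Ship winning subject line"])
print(overlaps(subject_test, cta_test))
print(ready_to_start(cta_test, completed=set()))
```

Filling in `result` when a test ends turns the roadmap into the record of past tests that later prioritization can draw on.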

How to use software to plan your roadmap

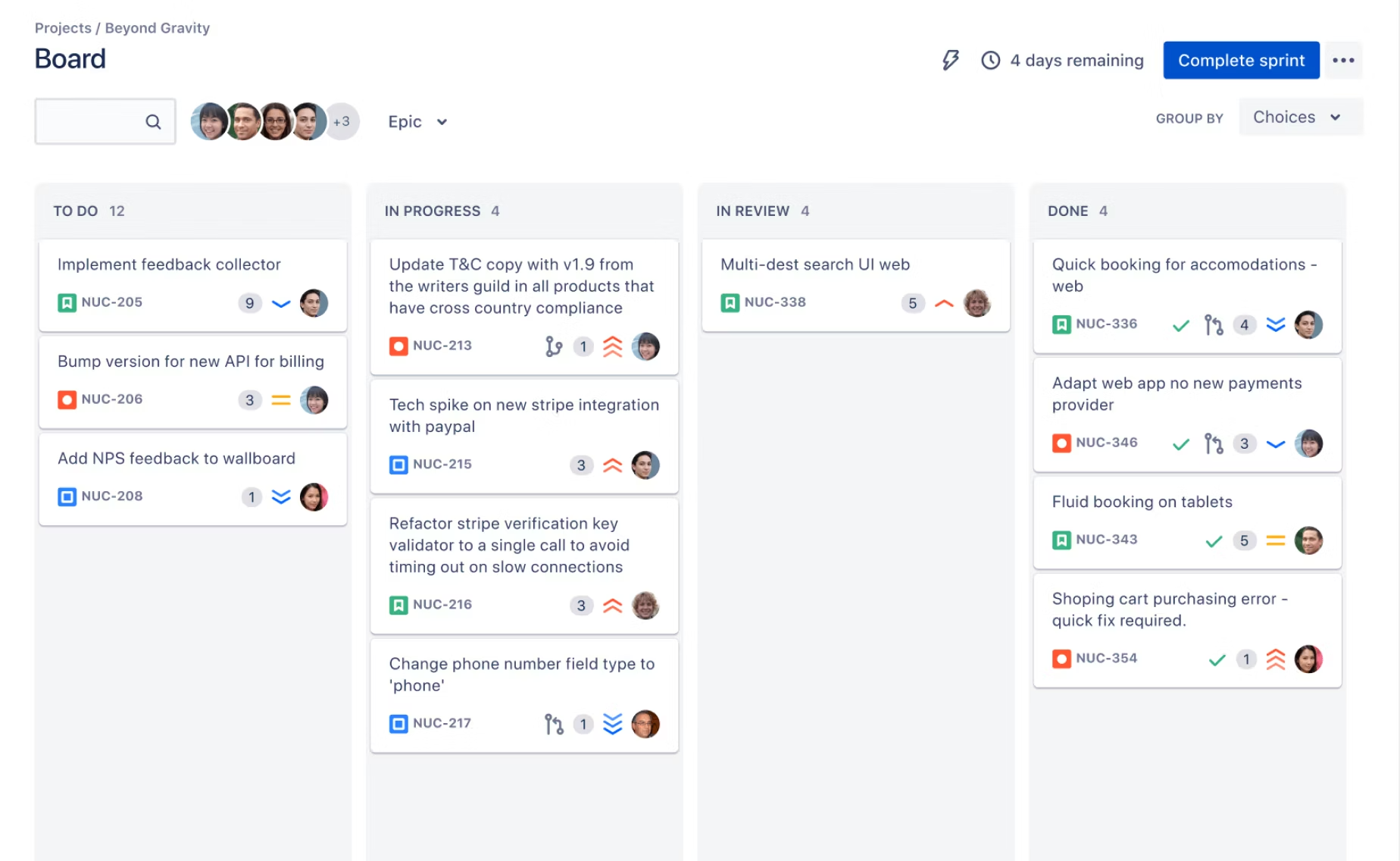

Lean on your project management software to build your roadmap, focusing on your timeline, the tasks to be completed, points of contact, and any critical dependencies (i.e., tasks that must be completed before moving on to the next stage). Keep your roadmap a living document that's updated regularly.

PM software automates much of this process by notifying individual contributors when task dependencies have been satisfied, adjusting timelines based on user input, and organizing tasks into accessible, easy-to-use interfaces like Kanban boards.

An example of a project management board from Jira (Source)

Establish an implementation process for testing

Creating an implementation process for your testing program lets you move through testing efficiently and implement results into your live marketing channels more seamlessly. Without one, it's easy to fall into the trap of running the winning test option indefinitely instead of making it live on your channels.

This can cause issues such as inconsistencies between your web and mobile experiences, or add quality assurance complexity to subsequent tests. To avoid this, make sure any results you decide to implement are carried out before further testing begins.

For example, if you determine that a new tactic for email headlines will be implemented, do that before you continue testing, say, CTA button placement in those same emails.

A proper implementation process [2] should address the following:

Who has the authority to decide whether test results move to live production? For example, someone from the customer experience team, a product manager, or a marketing channel owner.

What are the criteria for deciding whether a test result should move into production? Evaluate the potential costs and compare them with the benefit of the change you'd introduce. You may discover the time and resources required are not worth the benefit.

Who needs to be informed of the timing, scope, and implication of these changes? Notify individuals and teams beyond those involved in the testing process, including product, sales, customer care, and any marketing teammates working on affected channels.

Who is responsible for "pressing go" and implementing these changes in the live product? Is there a content management system where individual stakeholders can implement simple changes themselves? Or is there a single team or individual responsible?

What information and assets, if any, need to be conveyed to back-end teams like business operations and IT? Examples include technical documentation, notifications that web functionality has been updated, or notice that new engagement opportunities are available for customer-facing site experiences.

Who oversees or manages the newly tested procedure once it reaches production? This could be an individual tasked with overall marketing governance, those responsible for branding and customer experience, or the team responsible for specific marketing channels.
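For the criteria question in the list above, a rough cost-benefit comparison can be a starting point. The sketch below is a deliberately simplified, hypothetical model: it projects extra conversions from the observed lift over a chosen horizon and compares their value with a one-time implementation cost. It ignores ongoing maintenance, discounting, and uncertainty in the measured lift, and all names and numbers are illustrative.

```python
def worth_implementing(baseline_rate: float, winning_rate: float, monthly_visitors: int,
                       value_per_conversion: float, implementation_cost: float,
                       horizon_months: int = 12) -> tuple[bool, float]:
    """Return (decision, projected_benefit) for moving a winning variant into production."""
    # Extra conversions expected from the lift, summed over the planning horizon.
    extra_conversions = (winning_rate - baseline_rate) * monthly_visitors * horizon_months
    projected_benefit = extra_conversions * value_per_conversion
    return projected_benefit > implementation_cost, projected_benefit


# Hypothetical: a lift from 4% to 5% on 10,000 visitors/month, $20 per conversion.
print(worth_implementing(0.04, 0.05, 10_000, 20.0, implementation_cost=5_000.0))
# Same lift on a low-traffic page with an expensive rebuild.
print(worth_implementing(0.04, 0.05, 500, 20.0, implementation_cost=5_000.0))
```

The same winning result can be worth shipping on one channel and not on another, which is why the criteria should be agreed on before results come in.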

How to use software to formalize your implementation process

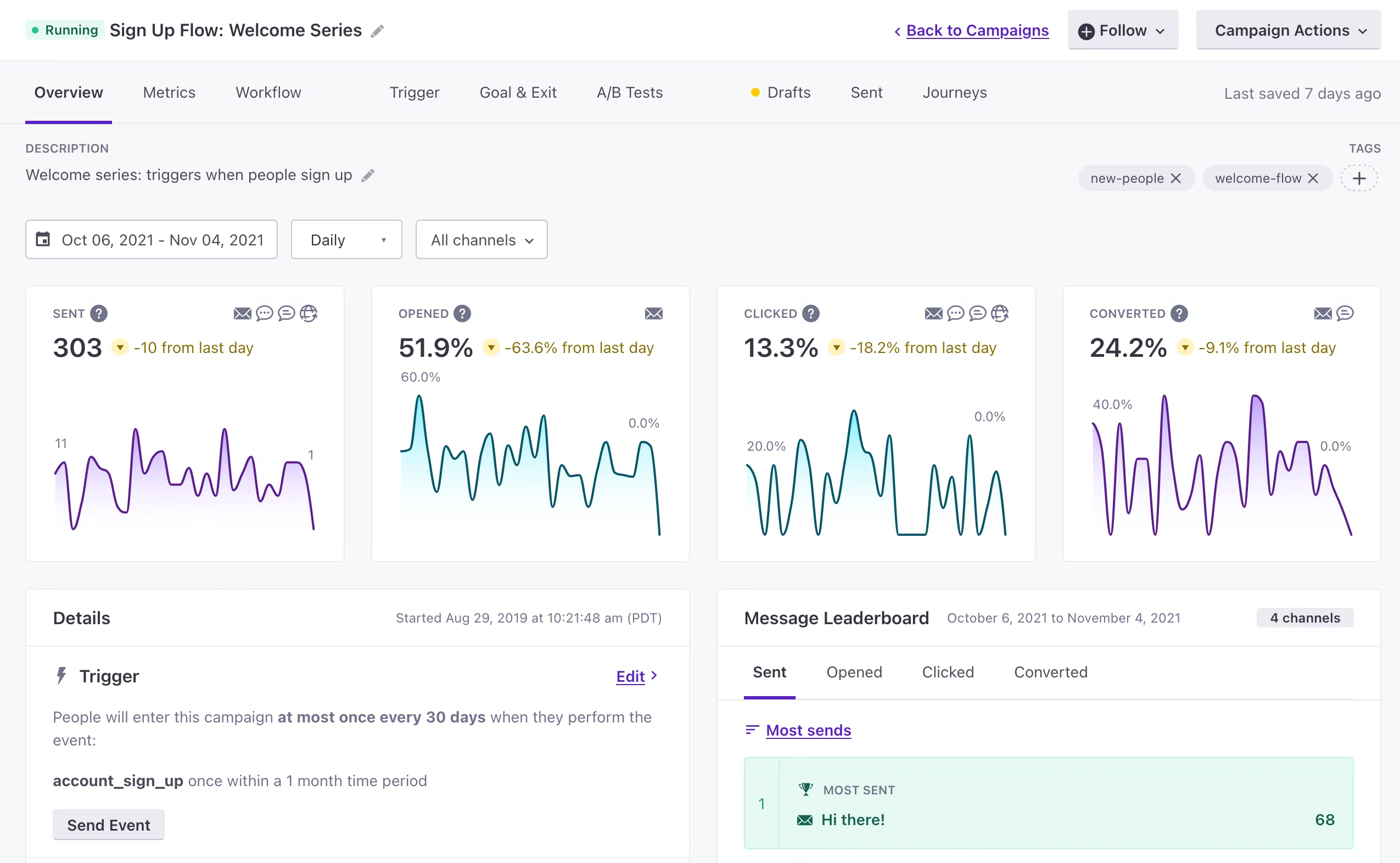

Use all available features within your A/B testing software to manage different parts of the process, including content creation and test management, marketing analytics, and reporting on test results. Housing this data and your testing process in one place (and integrating it with your customer data solution where possible) gives everyone involved in testing a single source of truth to rely on for accurate information.

An example of real-time campaign metrics from Customer.io (Source)

Putting it all together to optimize your testing strategy

A/B testing may seem straightforward, but running the perfect split test requires a little more than simply going from A to B.

By leveraging data to determine test parameters, developing a roadmap to sync on key details, and establishing a repeatable implementation process, you can achieve more accurate and insightful results faster than ever before.

Equally important is taking advantage of all available software—A/B testing, customer data solutions, and project management tools—to accomplish these steps. Using tech to navigate the process will keep you organized, collect learnings for future use, and keep you truly honest on what's working (and what's not).

For more on all things digital marketing, including split testing, keep an eye on the GetApp blog and start with these resources:

What is A/B Testing in Digital Marketing? A Startup Founder's Guide

Data-Driven Marketing: A Definitive Guide for Small Business